When it comes to software development, the cat’s out of the bag: failing to implement key security activities throughout the development lifecycle has made insecure software a top prize for hackers. Malicious attacks that exploit vulnerabilities are on the rise and are no longer limited to large enterprises. Small and medium-sized businesses (SMBs) are popular targets of cyberattacks and ransomware. Nor are vulnerabilities confined to security-sensitive software and applications. They turn up in cars, point-of-sale (POS) systems, and medical devices.

Developers are often blamed for security vulnerabilities, but that may be an unfair assessment. More often than not, security barely has a pulse in an organization’s Software Development Lifecycle (SDLC), let alone in the many developer-specific tasks that need to be carried out. This lack of organizational commitment to security is producing a steady stream of DevZombies: developers who do exactly what they are “told” to do and unknowingly aid and abet hackers.

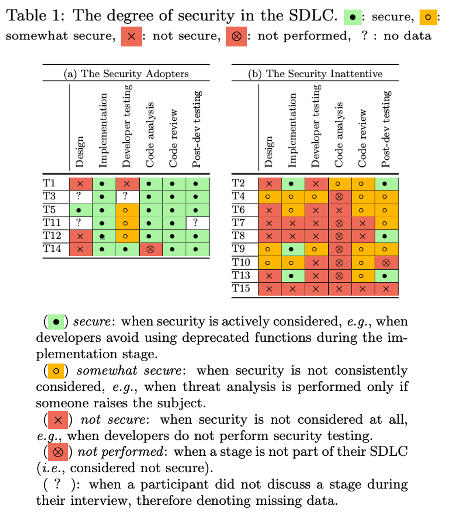

Findings from a Carleton University study support dozens of other similar (and more recent) studies. I like the Carleton study for a few reasons. First, it highlights an often-forgotten variable in securing software: what developers “think” they are responsible for. Second, it breaks the developer role into six discrete functions (as shown in Table 1 below): Design, Implementation, Developer Testing, Code Analysis, Code Review, and Post-Development Testing.

The Carleton researchers talked to 15 industry development teams (labeled T1 through T15) to understand the steps they take to ensure the security of their applications. Participants fell into two distinct cohorts. The first, Security Adopters, considers security in at least four of the six development functions. The second, which barely considers security, is labeled Security Inattentive. Its practices, where it followed any at all, led to poor security outcomes. Given the recent adoption of DevOps and CI/CD, one could create even more sub-categories of developers, which would make the picture even bleaker.

- In the design stage, most developers indicated that their teams did not view security as part of their charter. This may be because developers focus mainly on functional design tasks or lack the expertise to consider security during design.

- In the implementation stage, developers show general awareness of security. However, many participants in this study state that they are not responsible for security and are not required to secure their applications. Some even state that their companies do not expect them to have any software security knowledge. For the most part, though, security is a priority during implementation. Most companies encourage following secure coding best practices and use reliable tools to support them.

- In the developer testing stage, security is lacking. The main priority is functionality. Developers are held most accountable and blamed if they do not fulfill functionality requirements. In this case, security is an afterthought at best and certainly not considered an aspect of software quality.

- In the code analysis stage, teams typically have some form of mandatory security analysis requirement. Those with mandatory code analysis report running commercial static application security testing (SAST) tools before the code is passed along. Most treat security as a secondary objective and use SAST to verify conformity to industry standards. Developers who use SAST report an overwhelming number of false positives and irrelevant warnings. This dampens their enthusiasm for SAST, and many would prefer that another team run it.

- In the code review stage, most Security Adopters say that security is a primary component. This step is mostly manual and is often done in peer groups. Those in the Security Inattentive group either do not consider security at this stage or treat it informally, focusing on code efficiency instead.

- In the post-development testing stage, security is more of a concern. Development teams often collaborate here with testing and/or security teams, which do the testing and report findings back to developers. Most companies have an independent team (e.g., InfoSec, pen testers) that tests the application as a whole. Some companies do not consider security at this stage and instead focus on functionality, performance, and other quality attributes. With limited resources and fewer employees, smaller companies may rely on a single person to handle security. Many developers use company size to justify their lack of security practice (“Our company is small, so hackers won’t target us”).

Security is a complex problem that extends beyond developers. Sometimes a developer lacks security knowledge, but it is also true that developers’ supervisors sometimes dismiss security. Where a task or function (e.g., reliability) was a clear expectation, developers prioritized it.

Factors Affecting Security Practices

- Division of labor. Some teams violate defense-in-depth best practices because applying security at each SDLC stage conflicts with their team members’ roles and responsibilities. In some teams, developers are responsible only for functionality, while testers (or InfoSec) are responsible for security testing.

- Security knowledge. A developer’s lack of security knowledge results in lax security practices. In many cases, even trained developers remain focused mainly on their primary functional tasks rather than on security.

- Company culture. Is security part of the company culture? Is it recognized at the highest levels? Does the company commit to ongoing training?

- Resource availability. Budget constraints and a shortage of employees with the skills to perform security tasks limit what teams can do.

- External pressure. An overseeing entity such as a client or regulatory body can drive teams to adopt security practices.

- Experiencing a security incident. An incident may lead to stronger security practices and greater awareness, a trend increasingly visible at the C-suite and board level.

In practice, teams’ security practices differ markedly from the best practices identified in literature, standards, and other resources. Developers inherently want to build resilient software, but they need the know-how, and they need management to put them on a path to success. This analysis suggests that the problem often begins within a company’s hierarchy, which fails to formally document and declare which specific aspects of security are the developer’s responsibility.